Your website's visibility in search determines whether your message reaches the right customers. One of the main tools for understanding how Google’s search systems interact with your website is Google Search Console. Among its many features, Crawl reports are a valuable resource that shows how Google’s bots see and interact with your pages.

What are Google Search Console Crawl Reports?

Crawl reports in Google Search Console provide comprehensive information on how Google’s web crawler, known as “Googlebot,” navigates your site. These reports show which pages Google is crawling, how often it visits them, and whether it encounters any issues during the process. By analyzing these reports, webmasters and website owners can correct problems that could affect the visibility of their websites in search results.

The most critical components in Crawl Reports

Crawl Status: This section summarizes Googlebot’s latest activity on your website. It provides metrics like the number of pages that were crawled, the duration of crawling, and any significant changes compared with previous periods.

Crawl Errors: Here you can see a breakdown of the errors that Googlebot encounters while it crawls your site. The errors range from pages that can’t be found (404 errors) to server errors (5xx errors), and they can adversely affect your site’s ranking if left unresolved.

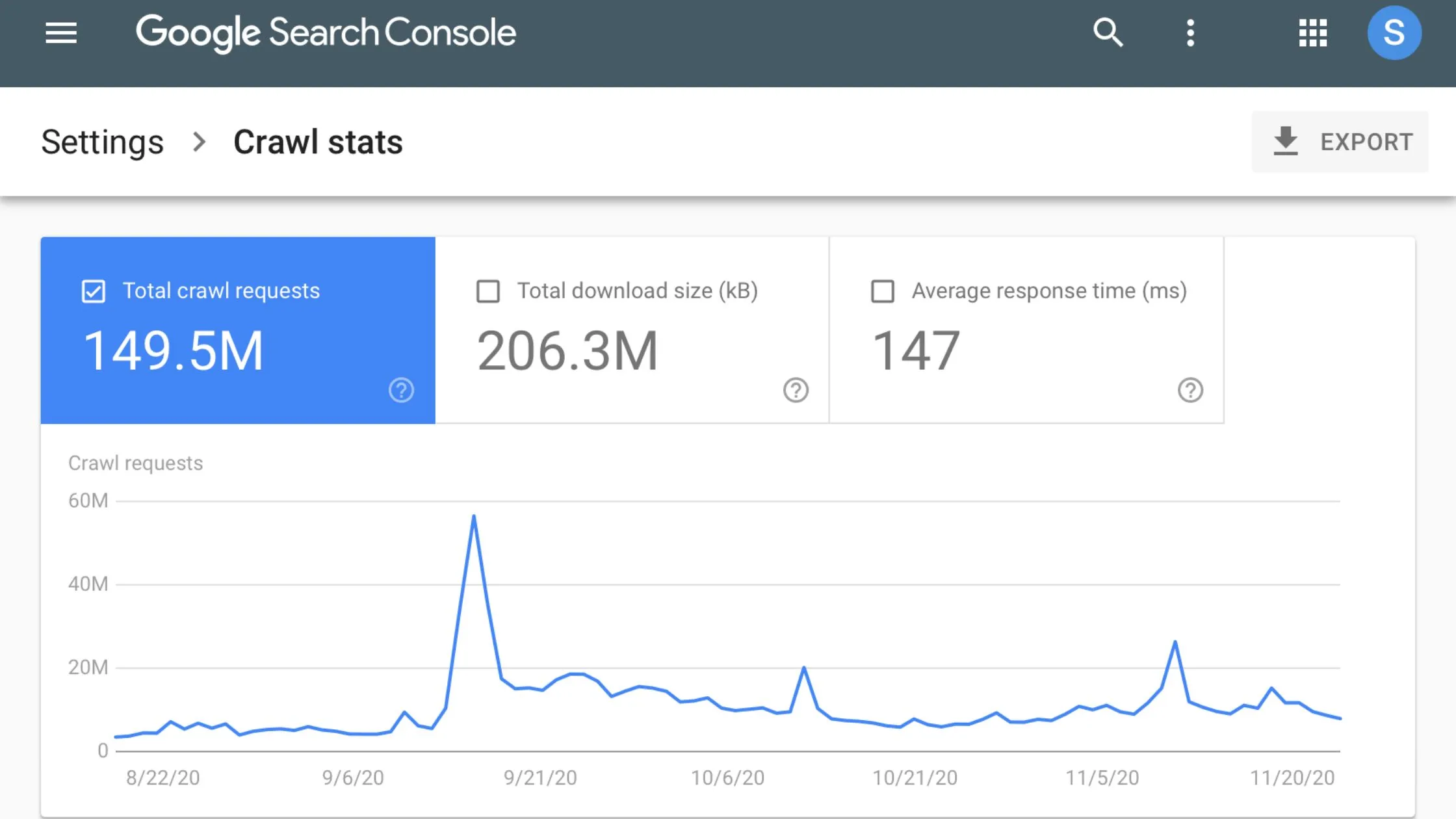

Crawl Stats: This section gives detailed statistics on Googlebot’s crawling behaviour over time. You can monitor metrics such as the number of pages crawled per day, the time it takes to download a page, and the time your server takes to respond. Understanding these statistics helps you improve your site’s performance and ensure effective crawling.

URL Inspection Tool: Although not technically part of the crawl reports, the URL Inspection tool inside Google Search Console lets you examine the indexing status of individual pages on your website. You can request indexing of specific URLs, troubleshoot indexing issues, and view any enhancements or errors discovered during the crawling process.
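To make the 404 and 5xx error categories and the response-time statistics described above more concrete, here is a minimal sketch in Python. It does not call Search Console; it assumes you already have a list of crawl records as `(url, status, response_ms)` tuples, for example from server access logs filtered to Googlebot. The record format, the sample data, and the names `classify_status` and `summarize_crawls` are all hypothetical and only for illustration.

```python
from collections import Counter
from statistics import mean

# Hypothetical crawl records: (url, HTTP status, response time in ms).
# In practice these might come from server access logs filtered to Googlebot.
SAMPLE_CRAWLS = [
    ("/", 200, 120),
    ("/about", 200, 180),
    ("/old-page", 404, 90),
    ("/checkout", 500, 950),
    ("/blog", 301, 60),
]


def classify_status(status: int) -> str:
    """Map an HTTP status code to a coarse crawl outcome."""
    if 200 <= status < 300:
        return "ok"
    if 300 <= status < 400:
        return "redirect"
    if status == 404:
        return "not_found"
    if 400 <= status < 500:
        return "client_error"
    if 500 <= status < 600:
        return "server_error"
    return "other"


def summarize_crawls(crawls):
    """Return the page count, outcome counts, and average response time in ms."""
    outcomes = Counter(classify_status(status) for _, status, _ in crawls)
    avg_ms = mean(ms for _, _, ms in crawls) if crawls else 0.0
    return {
        "pages_crawled": len(crawls),
        "outcomes": dict(outcomes),
        "avg_response_ms": avg_ms,
    }


summary = summarize_crawls(SAMPLE_CRAWLS)
print(summary["outcomes"])
print(summary["avg_response_ms"])
```

Grouping the results this way roughly mirrors how the reports separate "not found" pages from server errors, which usually call for different fixes (redirects or restored content for the former, server troubleshooting for the latter).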

What’s the significance of Crawl Reports?

Identifying Issues: Crawl reports act as an early warning system that alerts you to problems that could impact the visibility of your website in search results. Whether it’s broken links, server errors, or a problem in the structure of your website, prompt detection and resolution can prevent significant interruptions to your organic traffic.

Enhancing Performance: By studying crawl data, you gain insight into how Googlebot sees your website. This helps you make informed decisions about your website’s structure, content, and performance, improving both the crawlability of your site and the overall user experience.

Enhancing Indexation: Ensuring Googlebot can crawl and index your site efficiently is crucial to maximizing your site’s visibility in search results. Crawl reports help you find pages that are blocked from indexing or that show indexing issues, so you can take appropriate action to get more of your website indexed.

Monitoring Changes: Regularly checking crawl reports lets you monitor changes in the behaviour of Googlebot and uncover any trends or patterns that might affect your website’s performance. If there’s an abrupt increase in crawl errors or a decline in crawl activity, staying alert allows you to tackle problems quickly and keep your website healthy.
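As an illustration of the monitoring idea above, the following Python sketch flags days on which the number of crawl errors jumps well above the recent average. It assumes you have exported or recorded daily error counts yourself; the function name `find_error_spikes`, the 7-day window, the 2x threshold, and the sample numbers are hypothetical choices, not anything Search Console prescribes.

```python
from statistics import mean


def find_error_spikes(daily_errors, window=7, factor=2.0, min_errors=5):
    """Return indexes of days whose error count exceeds factor x the trailing average.

    daily_errors: list of daily crawl-error counts, oldest first.
    window:       number of preceding days used as the baseline.
    min_errors:   ignore days with fewer errors than this, to avoid noise.
    """
    spikes = []
    for i in range(window, len(daily_errors)):
        baseline = mean(daily_errors[i - window:i])
        today = daily_errors[i]
        if today >= min_errors and today > factor * baseline:
            spikes.append(i)
    return spikes


# Hypothetical daily crawl-error counts, oldest first.
errors = [3, 2, 4, 3, 2, 3, 4, 3, 25, 4]
print(find_error_spikes(errors))  # prints [8]
```

The same comparison can be inverted to catch a decline in crawl activity, for example by flagging days on which pages crawled fall below half of the trailing average.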

Conclusion

Google Search Console crawl reports give webmasters and website owners invaluable insight into how Google’s bots crawl and index their websites. Using these reports effectively can help you find and fix issues, optimize your site’s performance, and ultimately improve its visibility in search results. Regularly monitoring and analysing crawl data is a crucial part of a comprehensive SEO strategy that helps you stay ahead in the constantly changing world of search engine optimization.